Consul Service Mesh

Note: Nomad's service mesh integration requires Linux network namespaces. Consul service mesh will not run on Windows or macOS.

Consul service mesh provides service-to-service connection authorization and encryption using mutual Transport Layer Security (TLS). Applications can use sidecar proxies in a service mesh configuration to automatically establish TLS connections for inbound and outbound connections without being aware of the service mesh at all.

Nomad with Consul Service Mesh Integration

Nomad integrates with Consul to provide secure service-to-service communication between Nomad jobs and task groups. To support Consul service mesh, Nomad adds a new networking mode for jobs that enables tasks in the same task group to share their networking stack. With a few changes to the job specification, job authors can opt into service mesh integration. When service mesh is enabled, Nomad will launch a proxy alongside the application in the job file. The proxy (Envoy) provides secure communication with other applications in the cluster.

Nomad job specification authors can use Nomad's Consul service mesh integration to implement service segmentation in a microservice architecture running in public clouds without having to directly manage TLS certificates. This is transparent to job specification authors as security features in service mesh continue to work even as the application scales up or down or gets rescheduled by Nomad.

To use the Consul service mesh integration with Consul ACLs enabled, see the Secure Nomad Jobs with Consul Service Mesh guide.

Nomad Consul Service Mesh Example

The following section walks through an example to enable secure communication between a web dashboard and a backend counting service. The web dashboard and the counting service are managed by Nomad. Nomad additionally configures Envoy proxies to run alongside these applications. The dashboard is configured to connect to the counting service via localhost on port 9001. The proxy is managed by Nomad and handles mTLS communication to the counting service.

Prerequisites

Consul

The Consul service mesh integration with Nomad requires Consul 1.6 or later. The Consul agent can be run in dev mode with the following command:

consul agent -dev

Note: Nomad's Consul service mesh integration requires the consul binary in your $PATH.

To use service mesh on a non-dev Consul agent, you will minimally need to enable the gRPC port and set connect to enabled by adding some additional information to your Consul client configurations, depending on format. Consul agents running TLS and a version greater than 1.14.0 should set the grpc_tls configuration parameter instead of grpc. Please see the Consul port documentation for further reference material.

For HCL configurations:
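A minimal sketch of the HCL form follows. The port number 8502 is an assumption (it is the value conventionally used for Consul's plaintext gRPC listener); adjust it to match your environment.

```hcl
# Consul agent configuration fragment (sketch, not a complete config).

# Assumption: 8502 is used for the plaintext gRPC listener.
ports {
  grpc = 8502
}

# Enable the service mesh (Connect) on this agent.
connect {
  enabled = true
}
```

For TLS-enabled agents newer than 1.14.0, the paragraph above indicates that grpc_tls would be set in the ports block instead of grpc.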

For JSON configurations, the equivalent settings are "ports": { "grpc": 8502 } and "connect": { "enabled": true }.

Consul TLS

Note: Consul 1.14 made a backwards-incompatible change in how TLS-enabled gRPC listeners work. When using Consul 1.14 or later with TLS enabled, users will need to specify additional Nomad agent configuration to work with Connect. The consul.grpc_ca_file value must now be configured (introduced in Nomad 1.4.4), and consul.grpc_address will most likely need to be set to use the new standard grpc_tls port of 8503.
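As a rough sketch, the corresponding Nomad agent configuration might look like the following. The CA file path and the loopback address are hypothetical placeholders, not values taken from this guide.

```hcl
# Nomad agent configuration fragment (sketch).
consul {
  # Hypothetical path: the CA certificate that signed the Consul
  # agent's gRPC TLS certificate.
  grpc_ca_file = "/etc/tls/consul-agent-ca.pem"

  # Assumes a Consul agent on the same host with its grpc_tls
  # listener on the standard port 8503.
  grpc_address = "127.0.0.1:8503"
}
```

Other TLS-related parameters of the consul block (for the HTTP API, for example) are left out here and would depend on how your Consul agents are secured.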

Consul ACLs

Note: Starting in Nomad v1.3.0, Consul Service Identity ACL tokens automatically generated by Nomad on behalf of Connect-enabled services are now created in Local rather than Global scope, and are no longer replicated globally.

To facilitate cross-Consul datacenter requests of Connect services registered by Nomad, Consul agents will need to be configured with default anonymous ACL tokens with ACL policies of sufficient permissions to read service and node metadata pertaining to those requests. This mechanism is described in Consul #7414. A typical Consul agent anonymous token may contain an ACL policy such as:
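One plausible shape for such a policy is sketched below: read-only access to all services and nodes. Treat the empty prefixes as an assumption; a narrower prefix may suit your environment better.

```hcl
# Consul ACL policy rules (sketch) for the anonymous token.

# Read-only access to service metadata for every service name.
service_prefix "" {
  policy = "read"
}

# Read-only access to node metadata for every node name.
node_prefix "" {
  policy = "read"
}
```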

Transparent Proxy

Using Nomad's support for transparent proxy configures the task group's network namespace so that traffic flows through the Envoy proxy. When the transparent_proxy block is enabled:

- Nomad will invoke the consul-cni CNI plugin to configure iptables rules in the network namespace to force outbound traffic from an allocation to flow through the proxy.

- If the local Consul agent is serving DNS, Nomad will set the IP address of the Consul agent as the nameserver in the task's /etc/resolv.conf.

- Consul will provide a virtual IP for any upstream service the workload has access to, based on the service intentions.

Using transparent proxy has several important requirements:

- You must have the consul-cni CNI plugin installed on the client host along with the usual required CNI plugins.

- To use Consul DNS and virtual IPs, you will need to configure Consul's DNS listener to be exposed to the workload network namespace. You can do this without exposing the Consul agent on a public IP by setting the Consul bind_addr to bind on a private IP address (the default is to use the client_addr).

- The Consul agent must be configured with recursors if you want allocations to make DNS queries for applications outside the service mesh.

- You cannot set a network.dns block on the allocation (unless you set no_dns, see below).

For example, an HCL configuration with a go-sockaddr/template binding to the subnet 10.37.105.0/20, with recursive DNS set to OpenDNS nameservers:
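A sketch of such a Consul agent configuration is shown here. The exact template pipeline is an assumption built from standard go-sockaddr functions; the recursor addresses are the public OpenDNS resolvers.

```hcl
# Consul agent configuration fragment (sketch).

# Bind to the first private interface whose address falls inside
# the 10.37.105.0/20 subnet, using a go-sockaddr template.
bind_addr = "{{ GetPrivateInterfaces | include \"network\" \"10.37.105.0/20\" | limit 1 | attr \"address\" }}"

# Forward queries for names outside the service mesh to OpenDNS.
recursors = ["208.67.222.222", "208.67.220.220"]
```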

Nomad

Nomad must schedule onto a routable interface in order for the proxies to connect to each other. The following command starts a Nomad dev agent configured for Consul service mesh:

sudo nomad agent -dev-connect

CNI Plugins

Nomad uses CNI reference plugins to configure the network namespace used to secure the Consul service mesh sidecar proxy. All Nomad client nodes using network namespaces must have these CNI plugins installed.

To use transparent_proxy mode, Nomad client nodes will also need the consul-cni plugin installed.

Run the Service Mesh-enabled Services

Once Nomad and Consul are running, with Consul DNS enabled for transparent proxy mode as described above, submit the following service mesh-enabled services to Nomad by copying the HCL into a file named servicemesh.nomad.hcl and running:

nomad job run servicemesh.nomad.hcl

The job contains two task groups: an API service and a web frontend.

API Service

The API service is defined as a task group with a bridge network:
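A sketch of what this task group might look like inside the job block follows. The group name, task name, and Docker image are assumptions for illustration; the service name count-api and port 9001 follow the text of this guide.

```hcl
# Fragment of a job specification (sketch); belongs inside a job block.
group "api" {
  # Bridge mode gives the group its own network namespace,
  # shared by the application task and the Envoy sidecar.
  network {
    mode = "bridge"
  }

  service {
    name = "count-api"
    port = "9001" # hard-coded port the API listens on

    connect {
      sidecar_service {
        proxy {
          # Route traffic through Envoy and register a virtual IP.
          transparent_proxy {}
        }
      }
    }
  }

  task "web" {
    driver = "docker"

    config {
      # Assumed demo image; substitute your own API service.
      image = "hashicorpdev/counter-api:v3"
    }
  }
}
```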

Since the API service is only accessible via Consul service mesh, it does not define any ports in its network. The connect block enables the service mesh and the transparent_proxy block ensures that the service will be reachable via a virtual IP address when used with Consul DNS.

The port in the service block is the port the API service listens on. The Envoy proxy will automatically route traffic to that port inside the network namespace. Note that currently this cannot be a named port; it must be a hard-coded port value. See GH-9907.

Web Frontend

The web frontend is defined as a task group with a bridge network and a static forwarded port:
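A sketch of this task group follows. The group name, task name, port label, and Docker image are assumptions for illustration; the count-dashboard service name and port 9002 follow the text of this guide.

```hcl
# Fragment of a job specification (sketch); belongs inside a job block.
group "dashboard" {
  network {
    mode = "bridge"

    # Reserve host port 9002 and forward it into the namespace.
    port "http" {
      static = 9002
      to     = 9002
    }
  }

  service {
    name = "count-dashboard"
    port = "http"

    connect {
      sidecar_service {
        proxy {
          # Send outbound traffic through the Envoy proxy.
          transparent_proxy {}
        }
      }
    }
  }

  task "dashboard" {
    driver = "docker"

    config {
      # Assumed demo image; substitute your own frontend.
      image = "hashicorpdev/counter-dashboard:v3"
    }
  }
}
```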

The static = 9002 parameter requests the Nomad scheduler reserve port 9002 on

a host network interface. The to = 9002 parameter forwards that host port to

port 9002 inside the network namespace.

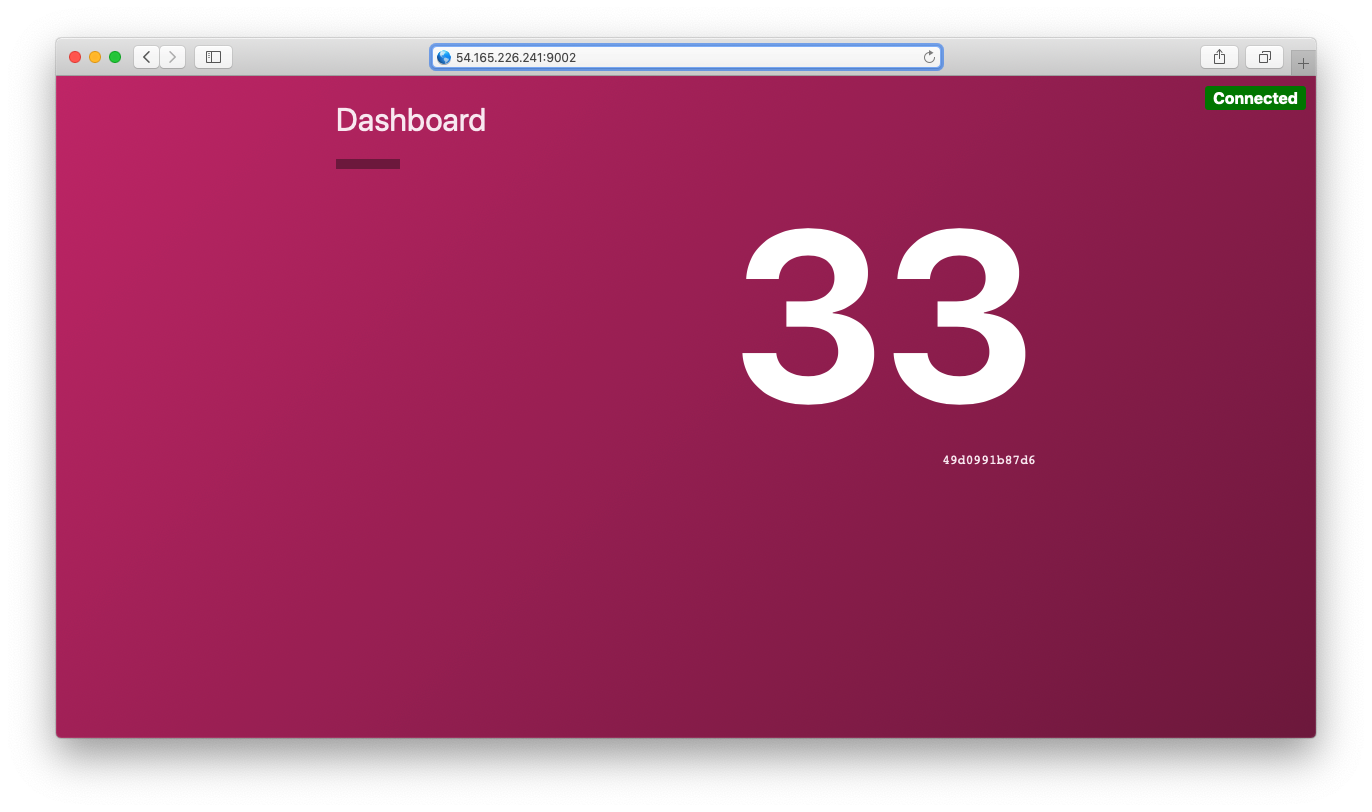

This allows you to connect to the web frontend in a browser by visiting

http://<host_ip>:9002 as show below:

The web frontend connects to the API service via Consul service mesh.

The connect block with transparent_proxy configures the web frontend's network namespace to route all access to the count-api service through the Envoy proxy.

The web frontend is configured to communicate with the API service with an environment variable $COUNTING_SERVICE_URL:
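A sketch of the env block inside the dashboard task, assuming the application reads its backend URL from this variable and speaks plain HTTP to the virtual address:

```hcl
# Fragment of a task block (sketch).
env {
  # Consul DNS resolves this name to the virtual IP of count-api.
  COUNTING_SERVICE_URL = "http://count-api.virtual.consul"
}
```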

The transparent_proxy block ensures that DNS queries are made to Consul so that the count-api.virtual.consul name resolves to a virtual IP address. Note that you don't need to specify a port number because the virtual IP will only be directed to the correct service port.

Manually Configured Upstreams

You can also use Connect without Consul DNS and transparent_proxy mode. This approach is not recommended because it requires duplicating service intention information in an upstreams block in the Nomad job specification. However, Consul DNS is not protected by ACLs, so you might prefer this approach if you don't want to expose Consul DNS to untrusted workloads.

In that case, you can add upstream blocks to the job spec. You don't need the transparent_proxy block for the count-api service:
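A sketch of the count-api service block in that case, with an empty sidecar_service block and no proxy configuration (port 9001 follows the counting service port used in this guide):

```hcl
# Fragment of a group block (sketch).
service {
  name = "count-api"
  port = "9001"

  connect {
    # Default sidecar settings; no transparent_proxy block needed.
    sidecar_service {}
  }
}
```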

But you'll need to add an upstreams block to the count-dashboard service:
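A sketch of the count-dashboard service block with a manually configured upstream. The port label "http" is an assumption for illustration; the destination name and local bind port follow the text of this guide.

```hcl
# Fragment of a group block (sketch).
service {
  name = "count-dashboard"
  port = "http" # assumed label of the group's forwarded port

  connect {
    sidecar_service {
      proxy {
        upstreams {
          # Remote service to reach through the mesh.
          destination_name = "count-api"
          # Port inside the network namespace where Envoy
          # exposes that service.
          local_bind_port = 8080
        }
      }
    }
  }
}
```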

The upstreams block defines the remote service to access (count-api) and what port to expose that service on inside the network namespace (8080).

The web frontend will also need to use an environment variable to communicate with the API service:
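A sketch of that env block, assuming the application reads its backend URL from COUNTING_SERVICE_URL and uses Nomad's NOMAD_UPSTREAM_ADDR_<service> runtime variable for the count-api upstream:

```hcl
# Fragment of a task block (sketch).
env {
  # Nomad interpolates the upstream's local address (host:port).
  COUNTING_SERVICE_URL = "http://${NOMAD_UPSTREAM_ADDR_count_api}"
}
```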

This environment variable value gets interpolated with the upstream's address. Note that dashes (-) are converted to underscores (_) in environment variables so count-api becomes count_api.

Limitations

- The minimum Consul version to use Connect with Nomad is Consul v1.8.0.

- The consul binary must be present in Nomad's $PATH to run the Envoy proxy sidecar on client nodes.

- Consul service mesh using network namespaces is only supported on Linux.

- Prior to Consul 1.9, the Envoy sidecar proxy will drop and stop accepting connections while the Nomad agent is restarting.

Troubleshooting

If the sidecar service is not running correctly, you can investigate potential Envoy failures in the following ways:

- Task logs in the associated connect-* task

- Task secrets (may contain sensitive information):

  - envoy CLI command: secrets/.envoy_bootstrap.cmd

  - environment variables: secrets/.envoy_bootstrap.env

- An extra Allocation log file: alloc/logs/envoy_bootstrap.stderr.0

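For example, with an allocation ID starting with b36a and a sidecar task named connect-proxy-count-api:

nomad alloc exec -task connect-proxy-count-api b36a cat secrets/.envoy_bootstrap.cmd

nomad alloc exec -task connect-proxy-count-api b36a cat secrets/.envoy_bootstrap.env

nomad alloc fs b36a alloc/logs/envoy_bootstrap.stderr.0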

Note: If the alloc is unable to start successfully, debugging files may only be accessible from the host filesystem. However, the sidecar task secrets directory may not be available in systems where it is mounted in a temporary filesystem.